Version 69 (modified 3 years ago)

LSI MegaRAID SAS

1. Card information

MegaRAID SAS is the current high-end RAID controller series from LSI.

These are full hardware RAID controllers supporting at least RAID 5, with SAS or SATA interfaces.

If you're looking for information about MegaRAID SCSI controllers, please look at LSIMegaRAID instead.

All these cards can be used with the stock Linux kernel, which includes a working driver.

The driver is quite new and may therefore be missing from older distributions.

There is currently no known open-source tool that handles all of these cards.

Some older MegaRAID SAS cards can be used with megactl, but none of the current cards work with it.

However, LSI provides megacli, a proprietary command-line management utility that is rather hard to use.

2. Linux kernel drivers

| Driver | Supported cards |
|---|---|
| megaraid_sas | LSI MegaRAID SAS |

megaraid_sas is part of the mainline Linux kernel and should be available in all current distributions.

However, please note that most older distributions won't have this driver.

If your card uses the megaraid_mm or megaraid_mbox driver, please look at LSIMegaRAID instead.

Some lspci -nn output examples:

- 02:0e.0 RAID bus controller [0104]: Dell PowerEdge Expandable RAID controller 5 [1028:0015]

- 01:00.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS 1078 (rev 04) [1000:0060]

- 04:0e.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS [1000:0411]

- 03:00.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS 2108 [Liberator] [1000:0079] (rev 05)

- 10:00.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS TB [1000:005b] (rev 01)

- 01:00.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS 2008 [Falcon] [1000:0073] (rev 03) (Dell PERC H310 Mini)

- 01:00.0 RAID bus controller [0104]: LSI Logic / Symbios Logic MegaRAID SAS 2208 [Thunderbolt] [1000:005b] (rev 05) (Dell PERC H710 Mini)
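If you want to spot these controllers from a script, here is a minimal Python sketch that scans `lspci -nn` output for the vendor:device IDs listed above. The ID set only contains the examples from this page, so other MegaRAID SAS models won't be recognized; `KNOWN_MEGARAID_SAS_IDS` and `megaraid_sas_slots` are names made up for this example.

```python
import re

# vendor:device IDs taken from the lspci -nn examples above (not exhaustive)
KNOWN_MEGARAID_SAS_IDS = {
    "1028:0015",  # Dell PERC 5
    "1000:0060",  # MegaRAID SAS 1078
    "1000:0411",  # MegaRAID SAS
    "1000:0079",  # MegaRAID SAS 2108 [Liberator]
    "1000:005b",  # MegaRAID SAS TB / 2208 [Thunderbolt]
    "1000:0073",  # MegaRAID SAS 2008 [Falcon]
}

# lspci -nn prints numeric IDs as [vvvv:dddd]; the class code [0104] has no colon
PCI_ID_RE = re.compile(r"\[([0-9a-f]{4}:[0-9a-f]{4})\]")


def megaraid_sas_slots(lspci_output: str) -> list[tuple[str, str]]:
    """Return (pci_slot, vendor:device) for every line with a known ID."""
    found = []
    for line in lspci_output.splitlines():
        line = line.strip()
        if not line:
            continue
        slot = line.split()[0]  # e.g. "01:00.0"
        for pci_id in PCI_ID_RE.findall(line):
            if pci_id in KNOWN_MEGARAID_SAS_IDS:
                found.append((slot, pci_id))
    return found
```

You would feed it `subprocess.run(["lspci", "-nn"], capture_output=True, text=True).stdout`.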

3. Management and reporting tools

megactl includes a SAS-compatible binary named megasasctl. It seems to work on older cards but fails with newer ones.

If megasasctl doesn't work for you, you will have to use the proprietary CLI utility from LSI: megaclisas.

For managing the card, there is no alternative to megaclisas.

3.1. megactl

Although megasasctl doesn't seem to work with recent cards, you should still give it a try.

3.1.1. Quickstart and output example

Print current controller status:

server:~# megasasctl
a0 PERC 5/i Integrated encl:1 ldrv:1 batt:good
a0d0 136GiB RAID 1 1x2 optimal
a0e8s0 136GiB a0d0 online
a0e8s1 136GiB a0d0 online

[root@server ~]# megasasctl
a0 PERC 5/i Integrated encl:1 ldrv:2 batt:good
a0d0 67GiB RAID 1 1x2 optimal
a0d1 836GiB RAID 5 1x4 optimal
a0e8s0 68GiB a0d0 online
a0e8s1 68GiB a0d0 online
a0e8s2 279GiB a0d1 online
a0e8s3 279GiB a0d1 online
a0e8s4 279GiB a0d1 online
a0e8s5 279GiB a0d1 online

[root@server ~]# megasasctl
a0 PERC 6/i Integrated encl:1 ldrv:1 batt:good
a0d0 1861GiB RAID 6 1x6 optimal
a0e32s0 465GiB a0d0 online
a0e32s1 465GiB a0d0 online
a0e32s2 465GiB a0d0 online
a0e32s3 465GiB a0d0 online
a0e32s4 465GiB a0d0 online
a0e32s5 465GiB a0d0 online

Several switches are worth knowing:

- -H: only print lines that are not OK. If nothing is printed, everything is fine.

- -B: ignore battery problems when running with -H. megasasctl cannot tell whether your controller has a battery, so use this switch if yours doesn't have one.

3.1.2. Periodic checks

You can write your own script around megasasctl to check your adapter's health periodically. However, I have already done this for you: see megaraid-status below.
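If you'd rather roll your own, a minimal Python sketch can rely on the -H behaviour described above: nothing printed means everything is fine. The function names are hypothetical, and -B is only added when you say the controller has no battery.

```python
import subprocess


def megasasctl_command(ignore_battery: bool = False) -> list[str]:
    """Build the megasasctl call: -H prints only non-OK lines."""
    cmd = ["megasasctl", "-H"]
    if ignore_battery:
        cmd.append("-B")  # controller without battery: don't report it
    return cmd


def unhealthy_lines(output: str) -> list[str]:
    """With -H, any non-blank line is something that is not OK."""
    return [line for line in output.splitlines() if line.strip()]


def check_megasasctl(ignore_battery: bool = False) -> list[str]:
    """Run megasasctl and return the problem lines (empty list = healthy)."""
    result = subprocess.run(megasasctl_command(ignore_battery),
                            capture_output=True, text=True, check=False)
    return unhealthy_lines(result.stdout)
```

From cron, something like `problems = check_megasasctl(ignore_battery=True)` followed by a mail or syslog call when the list is not empty would do.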

3.2. megaraid-status

3.2.1. About megaraid-status

megaraidsas-status is a wrapper script around megactl with periodic checks.

It is also available in the package repository.

The package comes with a Python wrapper around megasasctl and an init script that runs this wrapper periodically to check the status.

It keeps a file with the latest status and is thus able to detect RAID status changes and failures.

It logs a line to syslog when something fails and sends you an email.

Until the arrays are healthy again, a reminder is sent every 2 hours.
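The change detection and reminder logic described above can be sketched like this in Python. This is not the code shipped in the package: the JSON state file, its keys and the function names are assumptions made for the example; only the 2-hour reminder interval comes from the description.

```python
import json
import time
from pathlib import Path
from typing import Optional

REMINDER_INTERVAL = 2 * 60 * 60  # seconds: remind every 2 hours while unhealthy


def should_alert(current: str, healthy: bool, state: dict, now: float) -> bool:
    """Decide whether to send a mail; `state` is what the previous run saved."""
    previous = state.get("status")
    if previous is None:
        # First run: only complain if something is already wrong.
        return not healthy
    if current != previous:
        # Any change (failure or recovery) is reported once.
        return True
    # Same unhealthy status as last time: remind every 2 hours.
    return (not healthy) and now - state.get("last_alert", 0.0) >= REMINDER_INTERVAL


def check_and_record(state_file: Path, current: str, healthy: bool,
                     now: Optional[float] = None) -> bool:
    """Compare with the saved status, save the new one, return True to alert."""
    now = time.time() if now is None else now
    state = json.loads(state_file.read_text()) if state_file.exists() else {}
    alert = should_alert(current, healthy, state, now)
    state_file.write_text(json.dumps({
        "status": current,
        "last_alert": now if alert else state.get("last_alert", 0.0),
    }))
    return alert
```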

3.2.2. Wrapper output example

server:~# megaraidsas-status
-- Arrays informations --
-- ID | Type | Size | Status
a0d0 | RAID 1 | 136GiB | optimal

-- Disks informations --
ID | Model | Status | Warnings
a0e8s0 | SEAGATE ST3146854SS 136GiB | online
a0e8s1 | SEAGATE ST3146854SS 136GiB | online

[root@server ~]# megaraidsas-status
-- Arrays informations --
-- ID | Type | Size | Status
a0d0 | RAID 1 | 67GiB | optimal
a0d1 | RAID 5 | 836GiB | optimal

-- Disks informations --
ID | Model | Status | Warnings
a0e8s0 | FUJITSU MBA3073RC 68GiB | online
a0e8s1 | FUJITSU MBA3073RC 68GiB | online
a0e8s2 | SEAGATE ST3300656SS 279GiB | online
a0e8s3 | SEAGATE ST3300656SS 279GiB | online
a0e8s4 | SEAGATE ST3300656SS 279GiB | online
a0e8s5 | SEAGATE ST3300656SS 279GiB | online

[root@server ~]# megaraidsas-status
-- Arrays informations --
-- ID | Type | Size | Status
a0d0 | RAID 6 | 1861GiB | optimal

-- Disks informations --
ID | Model | Status | Warnings
a0e32s0 | SEAGATE ST3500620SS 465GiB | online
a0e32s1 | SEAGATE ST3500620SS 465GiB | online
a0e32s2 | SEAGATE ST3500620SS 465GiB | online
a0e32s3 | SEAGATE ST3500620SS 465GiB | online
a0e32s4 | SEAGATE ST3500620SS 465GiB | online
a0e32s5 | SEAGATE ST3500620SS 465GiB | online

3.3. megacli

3.3.1. About megacli

megacli is a proprietary tool from LSI that can perform both reporting and management for MegaRAID SAS cards.

However, it's really hard to use because it takes tons of command-line parameters and there's hardly any documentation.

3.3.2. Quickstart and output example

Get the status and configuration of all adapters:

server:~# megacli -AdpAllInfo -aAll

Adapter #0

==============================================================================

Versions

================

Product Name : PERC 5/i Integrated

Serial No : 12345

FW Package Build: 5.2.1-0067

Mfg. Data

================

Mfg. Date : 00/00/00

Rework Date : 00/00/00

Revision No : @��A

Battery FRU : N/A

Image Versions In Flash:

================

Boot Block Version : R.2.3.12

BIOS Version : MT28-8

MPT Version : MPTFW-00.10.61.00-IT

FW Version : 1.03.40-0316

WebBIOS Version : 1.03-04

Ctrl-R Version : 1.04-019A

[...]

Status and type of logical drive 0 on adapter 0:

server:~# megacli -LDInfo -L0 -a0

Adapter 0 -- Virtual Drive Information:
Virtual Disk: 0 (Target Id: 0)
Name:raid1
RAID Level: Primary-1, Secondary-0, RAID Level Qualifier-0
Size:237824MB
State: Optimal
Stripe Size: 64kB
Number Of Drives:2
Span Depth:1
Default Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU
Current Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU
Access Policy: Read/Write
Disk Cache Policy: Disk's Default
Exit Code: 0x00
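Most megacli output uses a `Key: Value` layout, so a small parser is usually enough when scripting around it. A minimal Python sketch, assuming one field per line as in the output above (the function name is mine). Repeated keys overwrite each other, so use it on a single logical drive.

```python
def parse_key_values(output: str) -> dict[str, str]:
    """Parse 'Key: Value' and 'Key:Value' lines from megacli output.

    Only the first ':' splits key from value, so values such as
    'WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU' stay intact.
    Lines without ':' (banners, '[...]') are skipped.
    """
    info = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip():
            info[key.strip()] = value.strip()
    return info
```

A health check then boils down to `parse_key_values(out).get("State") == "Optimal"`.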

Display, disable or enable automatic rebuild on adapter 0:

server:~# megacli -AdpAutoRbld -Dsply -a0
Adapter 0: AutoRebuild is Enabled.
Exit Code: 0x00
server:~# megacli -AdpAutoRbld -Dsbl -a0
Adapter 0: AutoRebuild is Disabled.
Exit Code: 0x00
server:~# megacli -AdpAutoRbld -Enbl -a0
Adapter 0: AutoRebuild is Enabled.
Exit Code: 0x00

Get and modify the rebuild rate:

server:~# megacli -AdpGetProp RebuildRate -a0
Adapter 0: Rebuild Rate = 30%
Exit Code: 0x00
server:~# megacli -AdpSetProp RebuildRate 60 -a0
Adapter 0: Set rebuild rate to 60% success.
Exit Code: 0x00

Show the physical disks on the first controller:

server:~# megacli -PDList -a0
[...]
Enclosure Device ID: 32
Slot Number: 1
Device Id: 1
Sequence Number: 9
Media Error Count: 0
Other Error Count: 0
Predictive Failure Count: 0
Last Predictive Failure Event Seq Number: 0
PD Type: SAS
Raw Size: 140014MB [0x11177328 Sectors]
Non Coerced Size: 139502MB [0x11077328 Sectors]
Coerced Size: 139392MB [0x11040000 Sectors]
Firmware state: Rebuild
SAS Address(0): 0x5000c5000c8579d1
SAS Address(1): 0x0
Connected Port Number: 1(path0)
Inquiry Data: SEAGATE ST3146855SS S5283LN6CNGM
Foreign State: None

We can see that disk [32:1] (enclosure ID 32, slot 1) is currently rebuilding (see its firmware state).

Let's check the progress of this operation:
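Spotting such disks in a long -PDList output gets tedious. Here is a Python sketch that splits the output into one record per disk and reports those whose firmware state isn't Online. It assumes every disk record starts with `Enclosure Device ID:`, as in the outputs on this page; the function names are hypothetical, and hot spares or unconfigured disks are reported too.

```python
def parse_pdlist(output: str) -> list[dict[str, str]]:
    """Split megacli -PDList output into one dict per physical disk."""
    disks: list[dict[str, str]] = []
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # '[...]' and banner lines
        key, value = key.strip(), value.strip()
        if key == "Enclosure Device ID":
            disks.append({})  # assumed first field of every disk record
        if disks:
            disks[-1].setdefault(key, value)
    return disks


def not_online(disks: list[dict[str, str]]) -> list[str]:
    """Return '[enclosure:slot] state' for disks that are not Online."""
    return [
        f"[{d.get('Enclosure Device ID')}:{d.get('Slot Number')}] {d.get('Firmware state')}"
        for d in disks
        if not d.get("Firmware state", "").startswith("Online")
    ]
```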

server:~# megacli -PDRbld -ShowProg -PhysDrv [32:1] -aALL
Rebuild Progress on Device at Enclosure 32, Slot 1 Completed 51% in 10 Minutes.
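Assuming the rebuild keeps a constant pace (it won't exactly, since it depends on the rebuild rate and on I/O load), the remaining time is roughly t × (100 - p) / p. With the output above: 10 × 49 / 51 ≈ 9.6 minutes. A small Python sketch of that estimate (the function name is hypothetical):

```python
import re

PROGRESS_RE = re.compile(r"Completed (\d+)% in (\d+) Minutes")


def estimate_remaining_minutes(progress_line: str) -> float:
    """Linear extrapolation: if p% took t minutes, the rest takes t*(100-p)/p."""
    match = PROGRESS_RE.search(progress_line)
    if match is None:
        raise ValueError(f"unrecognized progress line: {progress_line!r}")
    percent, minutes = int(match.group(1)), int(match.group(2))
    if percent == 0:
        raise ValueError("no progress yet, cannot extrapolate")
    return minutes * (100 - percent) / percent
```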

Configure coercion (limit disk size to maximize compatibility with different vendors)

Drives of the same nominal size (4 TB, for instance) don't all have exactly the same capacity, so it's recommended to use the coercion feature, which rounds the usable size down slightly to maximize compatibility.

Get the current value:

server:~# megacli -AdpGetProp CoercionMode -a0
Adapter 0: Coercion Mode : Disabled

Available values are 0 (disabled), 1 (round down to 128 MB) and 2 (round down to 1 GB). I personally recommend 1 GB:

server:~# megacli -AdpSetProp CoercionMode 2 -a0
Adapter 0: Set Coercion Mode to 2 success.
server:~# megacli -AdpGetProp CoercionMode -a0
Adapter 0: Coercion Mode : 1GB
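The rounding itself is simple integer arithmetic. Here is a Python sketch, assuming "1 GB" means 1024 MB. With mode 1, the 139502 MB disk from the -PDList output above gives 139392 MB, which happens to match its "Coerced Size". The two 4 TB sizes in the usage example are hypothetical, chosen to show that slightly different drives end up with the same usable size.

```python
def coerce_mb(size_mb: int, mode: int) -> int:
    """Round a disk size (in MB) down according to the coercion mode.

    Mode 0 = disabled, 1 = round down to 128 MB, 2 = round down to 1 GB.
    Assumption: 1 GB = 1024 MB. Other modes raise KeyError.
    """
    granularity = {0: 1, 1: 128, 2: 1024}[mode]
    return size_mb // granularity * granularity


# Two hypothetical "4 TB" drives that differ by 13 MB:
print(coerce_mb(3815447, 2), coerce_mb(3815460, 2))  # both become 3815424
```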

Create a RAID6 array with megacli

Let's assume we have a server with two MegaRAID SAS cards. The first one is already set up, and we have just plugged a disk bay into the second card.

List the physical disks on the second card (printing only enclosure and slot numbers):

server:~# megacli -PDlist -a1 | grep -e '^Enclosure Device ID:' -e '^Slot Number:'
Enclosure Device ID: 0
Slot Number: 0
Enclosure Device ID: 0
Slot Number: 1
Enclosure Device ID: 0
Slot Number: 2
Enclosure Device ID: 0
Slot Number: 3
Enclosure Device ID: 0
Slot Number: 4
Enclosure Device ID: 0
Slot Number: 5
Enclosure Device ID: 0
Slot Number: 6

Now that we have all the enclosure and slot numbers, let's create the new array:

server:~# megacli -CfgLdAdd -r6 [0:0,0:1,0:2,0:3,0:4,0:5,0:6] -a1
Adapter 1: Created VD 0
Adapter 1: Configured the Adapter!!
Exit Code: 0x00
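With many slots, typing the drive list by hand is error-prone. This Python sketch builds the `[E:S,...]` argument from the grep output above (it assumes each `Slot Number:` line directly follows its `Enclosure Device ID:` line; the function names are hypothetical):

```python
import re

PAIR_RE = re.compile(r"Enclosure Device ID:\s*(\d+)\s+Slot Number:\s*(\d+)")


def drive_list(pdlist_output: str) -> str:
    """Build the '[E:S,E:S,...]' argument used by -CfgLdAdd."""
    pairs = PAIR_RE.findall(pdlist_output)
    return "[" + ",".join(f"{enc}:{slot}" for enc, slot in pairs) + "]"


def cfgldadd_command(raid_level: int, pdlist_output: str, adapter: int) -> list[str]:
    """Argument list for creating one logical drive over all listed disks."""
    return ["megacli", "-CfgLdAdd", f"-r{raid_level}",
            drive_list(pdlist_output), f"-a{adapter}"]
```

Passing the list to `subprocess.run()` without a shell has a side benefit: an unquoted `[0:0,...]` is a glob pattern for the shell, and would be expanded if a matching single-character file name happened to exist in the current directory.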

Read Cache, Write Cache, ReadAhead and Battery

A quick section about performance tuning.

Let's enable the read cache and always cache data:

server:~# megacli -LDSetProp -Cached -LAll -aAll
Set Cache Policy to Cached on Adapter 0, VD 0 (target id: 0) success
Set Cache Policy to Cached on Adapter 1, VD 0 (target id: 0) success

Enable the disks' own cache:

server:~# megacli -LDSetProp EnDskCache -LAll -aAll
Set Disk Cache Policy to Enabled on Adapter 0, VD 0 (target id: 0) success
Set Disk Cache Policy to Enabled on Adapter 1, VD 0 (target id: 0) success

About ReadAhead: this feature reads additional data and stores it in the cache, anticipating that the system may access it soon.

We're going to enable an enhanced version of read-ahead: the adaptive one.

With this option, read-ahead is only used when the controller receives several accesses to sequential sectors. Otherwise it stays off, to avoid filling the cache with useless data when sectors are accessed randomly.

server:~# megacli -LDSetProp ADRA -LALL -aALL
Set Read Policy to Adaptive ReadAhead on Adapter 0, VD 0 (target id: 0) success
Set Read Policy to Adaptive ReadAhead on Adapter 1, VD 0 (target id: 0) success

It seems ADRA is deprecated: the current megacli binary no longer offers this option. Use regular read-ahead instead:

server:~# megacli -LDSetProp RA -LALL -aALL

Set Read Policy to ReadAhead on Adapter 0, VD 0 (target id: 0) success

Now we're going to enable the write cache. Beware of data loss! The write cache should be enabled ONLY if you have a battery pack on your controller.

Let's check whether we have one and whether it's working properly:

server:~# megacli -AdpBbuCmd -GetBbuStatus -a0 | grep -e '^isSOHGood' -e '^Charger Status' -e '^Remaining Capacity'
Charger Status: Complete
Remaining Capacity: 1445 mAh
isSOHGood: Yes
server:~# megacli -AdpBbuCmd -GetBbuStatus -a1 | grep -e '^isSOHGood' -e '^Charger Status' -e '^Remaining Capacity'
Charger Status: Complete
Remaining Capacity: 1353 mAh
isSOHGood: Yes
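To script this check before turning write-back on, a Python sketch could read the same three fields. Treating "isSOHGood: Yes" plus "Charger Status: Complete" as "safe for write-back" is my assumption based on the fields grepped above, not an LSI rule; the function names are hypothetical.

```python
def parse_bbu(output: str) -> dict[str, str]:
    """Collect 'Key: Value' fields from -GetBbuStatus output."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def bbu_ok(output: str) -> bool:
    """Battery considered usable: state of health good and charge complete."""
    f = parse_bbu(output)
    return f.get("isSOHGood") == "Yes" and f.get("Charger Status") == "Complete"
```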

Both adapters in this server have one, so let's enable the write cache:

server:~# megacli -LDSetProp WB -LALL -aALL
Set Write Policy to WriteBack on Adapter 0, VD 0 (target id: 0) success
Set Write Policy to WriteBack on Adapter 1, VD 0 (target id: 0) success

But have it disabled automatically if the battery fails or is discharged:

server:~# megacli -LDSetProp NoCachedBadBBU -LALL -aALL
Set No Write Cache if bad BBU on Adapter 0, VD 0 (target id: 0) success
Set No Write Cache if bad BBU on Adapter 1, VD 0 (target id: 0) success

Now we can check that everything is fine and reboot the server (I'm not sure the reboot is needed):

server:~# megacli -LDInfo -LAll -aAll

Adapter 0 -- Virtual Drive Information:
Virtual Disk: 0 (Target Id: 0)
[...]
Default Cache Policy: WriteBack, ReadAdaptive, Cached, No Write Cache if Bad BBU
Current Cache Policy: WriteBack, ReadAdaptive, Cached, No Write Cache if Bad BBU
Access Policy: Read/Write
Disk Cache Policy: Enabled
Encryption Type: None

Adapter 1 -- Virtual Drive Information:
Virtual Disk: 0 (Target Id: 0)
[...]
Default Cache Policy: WriteBack, ReadAdaptive, Cached, No Write Cache if Bad BBU
Current Cache Policy: WriteBack, ReadAdaptive, Cached, No Write Cache if Bad BBU
Access Policy: Read/Write
Disk Cache Policy: Enabled
Encryption Type: None
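A difference between the "Default" and "Current" cache policy of a virtual drive is worth watching: one likely cause is the controller falling back from WriteBack to WriteThrough because of "No Write Cache if Bad BBU". A Python sketch that pairs the two lines per virtual drive, in the order megacli prints them (the function name is hypothetical):

```python
import re

POLICY_RE = re.compile(r"^(Default|Current) Cache Policy:\s*(.+)$", re.MULTILINE)


def policy_mismatches(ldinfo_output: str) -> list[tuple[str, str]]:
    """Return (default, current) pairs that differ, one pair per virtual drive."""
    defaults, currents = [], []
    for kind, value in POLICY_RE.findall(ldinfo_output):
        (defaults if kind == "Default" else currents).append(value.strip())
    return [(d, c) for d, c in zip(defaults, currents) if d != c]
```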

References (extracted from Dell OpenManage doc):

Read Policy: The read policies indicate whether or not the controller should read sequential sectors of the logical drive when seeking data.

- Read-Ahead. When using read-ahead policy, the controller reads sequential sectors of the logical drive when seeking data. Read-ahead policy may improve system performance if the data is actually written to sequential sectors of the logical drive.

- No-Read-Ahead. Selecting no-read-ahead policy indicates that the controller should not use read-ahead policy.

- Adaptive Read-Ahead. When using adaptive read-ahead policy, the controller initiates read-ahead only if the two most recent read requests accessed sequential sectors of the disk. If subsequent read requests access random sectors of the disk, the controller reverts to no-read-ahead policy. The controller continues to evaluate whether read requests are accessing sequential sectors of the disk, and can initiate read-ahead if necessary.
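To make the adaptive policy concrete, here is a toy Python model of the rule quoted above: read-ahead is considered active only when the latest read starts exactly where the previous one ended, i.e. the two most recent reads were sequential. Real firmware certainly uses a more elaborate heuristic; this only illustrates the described behaviour.

```python
def adaptive_readahead(requests: list[tuple[int, int]]) -> list[bool]:
    """Toy model: requests are (start_sector, sector_count) in arrival order.

    For each request, returns whether read-ahead would be active after it.
    """
    decisions = []
    previous_end = None
    for start, count in requests:
        decisions.append(previous_end is not None and start == previous_end)
        previous_end = start + count
    return decisions


# Three sequential reads, a random jump, then sequential again:
print(adaptive_readahead([(0, 8), (8, 8), (16, 8), (1000, 8), (1008, 8)]))
```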

Write Policy: The write policies specify whether the controller sends a write-request completion signal as soon as the data is in the cache or after it has been written to disk.

- Write-Back. When using write-back caching, the controller sends a write-request completion signal as soon as the data is in the controller cache but has not yet been written to disk. Write-back caching may provide improved performance since subsequent read requests can more quickly retrieve data from the controller cache than they could from the disk. Write-back caching also entails a data security risk, however, since a system failure could prevent the data from being written to disk even though the controller has sent a write-request completion signal. In this case, data may be lost. Other applications may also experience problems when taking actions that assume the data is available on the disk.

- Write-Through. When using write-through caching, the controller sends a write-request completion signal only after the data is written to the disk. Write-through caching provides better data security than write-back caching, since the system assumes the data is available only after it has been safely written to the disk.

Cache Policy: The Direct I/O and Cache I/O cache policies apply to reads on a specific virtual disk. These settings do not affect the read-ahead policy. The cache policies are as follows:

- Cache I/O. Specifies that all reads are buffered in cache memory.

- Direct I/O. Specifies that reads are not buffered in cache memory. When using direct I/O, data is transferred to the controller cache and the host system simultaneously during a read request. If a subsequent read request requires data from the same data block, it can be read directly from the controller cache. The direct I/O setting does not override the cache policy settings. Direct I/O is also the default setting.

Rebuilding a disk by hand when it doesn't occur automatically

I noticed this strange behavior on an IBM controller: unplugging a disk from an array and plugging it back in doesn't make the controller rebuild the array with that disk.

Here is what to do:

server:~# megacli -PDlist -a0
[...]
Enclosure Device ID: 252
Slot Number: 4
Device Id: 3
[...]
Firmware state: Unconfigured(bad)
[...]
Secured: Unsecured
Locked: Unlocked
Foreign State: Foreign
Foreign Secure: Drive is not secured by a foreign lock key
Device Speed: 6.0Gb/s
Link Speed: 3.0Gb/s
Media Type: Hard Disk Device
[...]

The disk drive identified as [252:4] ([enclosureid:slotnumber]) is currently 'Unconfigured(bad)'.

Mark the drive as good again:

server:~# megacli -PDMakeGood -PhysDrv[252:4] -a0
Adapter: 0: EnclId-252 SlotId-4 state changed to Unconfigured-Good.

The controller will now recognize the disk as a "foreign" one: it has detected RAID configuration data on it and therefore considers it part of an array that could be imported into the current controller configuration.

We will now ask the controller to scan for foreign configurations and clear them:

server:~# megacli -CfgForeign -Scan -a0
There are 1 foreign configuration(s) on controller 0.
server:~# megacli -CfgForeign -Clear -a0
Foreign configuration 0 is cleared on controller 0.

The disk should now be available to be put back into the array.

Let's check it:

server:~# megacli -PDList -a0
[...]
Enclosure Device ID: 252
Slot Number: 4
[...]
Firmware state: Unconfigured(good), Spun Up
Foreign State: None
[...]

We now need to figure out how that disk was identified inside the RAID array:

server:~# megacli -CfgDsply -a0
[...]
DISK GROUPS: 1
Number of Spans: 1
SPAN: 0
Span Reference: 0x01
Number of PDs: 4
Number of VDs: 1
Number of dedicated Hotspares: 0
Virtual Disk Information:
Virtual Disk: 0 (Target Id: 1)
[...]
Physical Disk: 2
Physical Disk: 3
Enclosure Device ID: 252
Slot Number: 5
Device Id: 4
[...]

Here is what matters:

- Span Reference: 0x01 is the number of the array (drop the 0x0 prefix: this is array 1).

- Physical Disk: 2 has no information, which means that drive is missing.

Now we have all we need to add the disk back into the array.

Put disk [252:4] back into array 1, as disk 2 (row 2):

server:~# megacli -PdReplaceMissing -PhysDrv[252:4] -array1 -row2 -a0
Adapter: 0: Missing PD at Array 1, Row 2 is replaced
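Finding the missing row by eye works for one array; for a bigger configuration, a Python sketch along these lines could do it. It assumes, as in the -CfgDsply output above, that a present drive's `Physical Disk: N` line is followed by its own fields, while a missing one is directly followed by the next `Physical Disk:` or `Span Reference:` line (or the end of output). Function names are hypothetical.

```python
def missing_rows(cfgdsply_output: str) -> list[tuple[int, int]]:
    """Return (array, row) pairs whose 'Physical Disk: N' entry has no details."""
    missing = []
    array = None
    pending_row = None  # last 'Physical Disk:' row not yet followed by details
    for line in cfgdsply_output.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # '[...]' lines
        key, value = key.strip(), value.strip()
        if key in ("Physical Disk", "Span Reference"):
            if pending_row is not None:  # previous disk printed nothing
                missing.append((array, pending_row))
                pending_row = None
            if key == "Span Reference":
                array = int(value, 16)  # '0x01' -> array 1
            else:
                pending_row = int(value)
        elif pending_row is not None:
            pending_row = None  # a detail line: the drive is present
    if pending_row is not None:
        missing.append((array, pending_row))
    return missing


def replace_missing_command(enclosure: int, slot: int, array: int,
                            row: int, adapter: int) -> list[str]:
    return ["megacli", "-PdReplaceMissing", f"-PhysDrv[{enclosure}:{slot}]",
            f"-array{array}", f"-row{row}", f"-a{adapter}"]
```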

And finally start rebuilding:

server:~# megacli -PDRbld -Start -PhysDrv[252:4] -a0
Started rebuild progress on device(Encl-252 Slot-4)

Expand an array onto an additional disk

Thanks to a co-worker, I now have a quick how-to.

Assume your new unassigned drive is identified as [252:3] and you have a RAID 5 array identified as L0 (see the documentation above to figure out how to find these).

Reconfigure the array to add this new drive:

server ~ # megacli -LDRecon -Start -r5 -Add -PhysDrv[252:3] -L0 -a0
Start Reconstruction of Virtual Drive Success.
Exit Code: 0x00

Check the progress of the operation:

server ~ # megacli -LDInfo -L0 -a0

Adapter 0 -- Virtual Drive Information:
Virtual Drive: 0 (Target Id: 0)
[...]
Ongoing Progresses:
Reconstruction : Completed 40%, Taken 163 min.
[...]

3.3.3. Periodic checks

You can write your own script around megacli to check your adapter's health periodically. However, I have already done this for you: see megaclisas-status below.

Full documentation

A complete user guide is attached to this page as a PDF: megacli_user_guide.pdf.

3.4. megaclisas-status

3.4.1. About megaclisas-status

megaclisas-status is a wrapper script around megacli that reports a summarized RAID status and includes a periodic check feature.

It is also available in the package repository.

The package comes with a Python wrapper around megacli and an init script that runs this wrapper periodically to check the status.

It keeps a file with the latest status and is thus able to detect RAID status changes and failures.

It logs a line to syslog when something fails and sends you an email.

Until the arrays are healthy again, a reminder is sent every 2 hours.

3.4.2. Wrapper output example

server:~# megaclisas-status
-- Controller information --
-- ID | H/W Model | RAM | Temp | BBU | Firmware
c0 | PERC H700 Integrated | 512MB | N/A | Good | FW: 12.10.6-0001

-- Array information --
-- ID | Type | Size | Strpsz | Flags | DskCache | Status | OS Path | InProgress
c0u0 | RAID-1 | 931G | 64 KB | ADRA,WB | Enabled | Optimal | /dev/sda | None
c0u1 | RAID-1 | 1090G | 64 KB | ADRA,WB | Enabled | Optimal | /dev/sdb | None

-- Disk information --
-- ID | Type | Drive Model | Size | Status | Speed | Temp | Slot ID | LSI Device ID
c0u0p0 | HDD | SEAGATE ST91000640SS AS029XG09DFC | 931.0 GB | Online, Spun Up | 6.0Gb/s | 25C | [32:0] | 0
c0u0p1 | HDD | SEAGATE ST91000640SS AS029XG09AXW | 931.0 GB | Online, Spun Up | 6.0Gb/s | 26C | [32:1] | 1
c0u1p0 | HDD | SEAGATE ST1200MM0017 0002S3L062CK | 1.090 TB | Online, Spun Up | 6.0Gb/s | 25C | [32:2] | 2
c0u1p1 | HDD | SEAGATE ST1200MM0017 0001S3L03T0C | 1.090 TB | Online, Spun Up | 6.0Gb/s | 26C | [32:3] | 3

Another example (I broke the RAID by running "megacli -PDOffline -PhysDrv [32:0] -a0"):

server:~# megaclisas-status
-- Controller information --
-- ID | H/W Model | RAM | Temp | BBU | Firmware
c0 | PERC H730 Mini | 1024MB | 56C | Good | FW: 25.3.0.0016

-- Array information --
-- ID | Type | Size | Strpsz | Flags | DskCache | Status | OS Path | InProgress
c0u0 | RAID-1 | 3637G | 64 KB | RA,WB | Default | Degraded | /dev/sde | None

-- Disk information --
-- ID | Type | Drive Model | Size | Status | Speed | Temp | Slot ID | LSI Device ID
c0u0p0 | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GHSE | 3.637 TB | Offline | 6.0Gb/s | 34C | [32:0] | 0
c0u0p1 | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GJ8X | 3.637 TB | Online, Spun Up | 6.0Gb/s | 35C | [32:1] | 1

-- Unconfigured Disk information --
-- ID | Type | Drive Model | Size | Status | Speed | Temp | Slot ID | LSI Device ID
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GZ1T | 3.637 TB | JBOD | 6.0Gb/s | 33C | [32:2] | 2
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GXRW | 3.637 TB | JBOD | 6.0Gb/s | 32C | [32:3] | 3
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GGR6 | 3.637 TB | JBOD | 6.0Gb/s | 32C | [32:4] | 4
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GJFC | 3.637 TB | JBOD | 6.0Gb/s | 31C | [32:5] | 5

There is at least one disk/array in a NOT OPTIMAL state.
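If you want to feed this report into another tool, the `|`-separated layout is easy to parse. A Python sketch that maps each array ID to its Status column, assuming the layout shown above (a `-- Array information --` banner, a `-- ID | ...` header row, then one row per array); the older "Arrays informations" format further down is not handled, and the function name is hypothetical.

```python
def array_status(output: str) -> dict[str, str]:
    """Map array ID (e.g. 'c0u0') to its Status column."""
    statuses = {}
    in_arrays = False
    header: list[str] = []
    for line in output.splitlines():
        line = line.strip()
        if line.startswith("-- ") and line.endswith(" --"):
            # Section banner: only the array section is of interest.
            in_arrays = line == "-- Array information --"
            header = []
            continue
        if not in_arrays or "|" not in line:
            continue
        cells = [c.strip() for c in line.split("|")]
        if cells[0] == "-- ID":
            header = cells
        elif header:
            statuses[cells[0]] = cells[header.index("Status")]
    return statuses
```

Something like `[a for a, s in array_status(out).items() if s != "Optimal"]` then lists the arrays that need attention.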

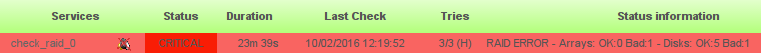

Nagios mode (run with --nagios):

server:~# megaclisas-status --nagios
RAID ERROR - Arrays: OK:0 Bad:1 - Disks: OK:5 Bad:1
server:~# echo $?
2

The same example, but with the missing disk rebuilding (I ran "megacli -PDRbld -Start -PhysDrv [32:0] -a0" for this example):

server:~# megaclisas-status
-- Controller information --
-- ID | H/W Model | RAM | Temp | BBU | Firmware
c0 | PERC H730 Mini | 1024MB | 56C | Good | FW: 25.3.0.0016

-- Array information --
-- ID | Type | Size | Strpsz | Flags | DskCache | Status | OS Path | InProgress
c0u0 | RAID-1 | 3637G | 64 KB | RA,WB | Default | Degraded | /dev/sde | None

-- Disk information --
-- ID | Type | Drive Model | Size | Status | Speed | Temp | Slot ID | LSI Device ID
c0u0p0 | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GHSE | 3.637 TB | Rebuilding (1%) | 6.0Gb/s | 35C | [32:0] | 0
c0u0p1 | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GJ8X | 3.637 TB | Online, Spun Up | 6.0Gb/s | 37C | [32:1] | 1

-- Unconfigured Disk information --
-- ID | Type | Drive Model | Size | Status | Speed | Temp | Slot ID | LSI Device ID
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GZ1T | 3.637 TB | JBOD | 6.0Gb/s | 36C | [32:2] | 2
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GXRW | 3.637 TB | JBOD | 6.0Gb/s | 34C | [32:3] | 3
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GGR6 | 3.637 TB | JBOD | 6.0Gb/s | 36C | [32:4] | 4
c0uXpY | HDD | SEAGATE ST4000NM0023 GS10Z1Z9GJFC | 3.637 TB | JBOD | 6.0Gb/s | 34C | [32:5] | 5

There is at least one disk/array in a NOT OPTIMAL state.

A server with two controllers (output from an old version of the script; sorry, I don't have such hardware to produce a newer example). The first controller has a RAID 1 array working fine. The second has a RAID 6 array of 7 drives with one drive offline (the array has just been created, so it is also being initialized):

server:~# megaclisas-status
-- Controller informations --
-- ID | Model
c0 | PERC 6/i Integrated
c1 | PERC 6/E Adapter

-- Arrays informations --
-- ID | Type | Size | Status | InProgress
c0u0 | RAID1 | 69G | Optimal | None
c1u0 | RAID6 | 3574G | Partially Degraded | Background Initialization: Completed 0%, Taken 2 min.

-- Disks informations --
ID | Model | Status
c0u0p0 | SEAGATE ST373455SS S5283LQ44AGP | Online
c0u0p1 | SEAGATE ST373455SS S5283LQ44ELN | Online
c1u0p0 | SEAGATE ST3750630SS MS049QK1DQWD | Online
c1u0p1 | SEAGATE ST3750630SS MS049QK1DQT9 | Online
c1u0p2 | SEAGATE ST3750630SS MS049QK11NJY | Online
c1u0p3 | SEAGATE ST3750630SS MS049QK1DQM5 | Online
c1u0p4 | Unknown | Offline
c1u0p5 | SEAGATE ST3750630SS MS049QK1DQNV | Online
c1u0p6 | SEAGATE ST3750630SS MS049QK1DQX0 | Online

There is at least one disk/array in a NOT OPTIMAL state.

3.4.3. Nagios integration

The script can be called with the --nagios parameter. This forces a single-line output and makes the script return exit code 0 if everything is fine, or 2 if at least one thing is wrong. These are the standard return codes Nagios expects (0 means OK, 2 means CRITICAL).

You probably want to run the script through NRPE; define the command like this:

In /etc/nagios/nrpe.d/00_check_raid.cfg:
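The convention is easy to reproduce in your own checks. A Python sketch that builds a one-line summary in the style of the --nagios output shown above and picks the exit code; the "RAID OK" wording is a hypothetical counterpart to "RAID ERROR", and the function name is mine.

```python
# Standard Nagios plugin return codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def nagios_result(arrays_ok: int, arrays_bad: int,
                  disks_ok: int, disks_bad: int) -> tuple[str, int]:
    """Return (single status line, exit code)."""
    summary = (f"Arrays: OK:{arrays_ok} Bad:{arrays_bad}"
               f" - Disks: OK:{disks_ok} Bad:{disks_bad}")
    if arrays_bad or disks_bad:
        return f"RAID ERROR - {summary}", CRITICAL
    return f"RAID OK - {summary}", OK
```

A plugin would then `print(line)` and call `sys.exit(code)`.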

command[check_raid]=/usr/bin/sudo /usr/sbin/megaclisas-status --nagios

You also need a sudo configuration file, /etc/sudoers.d/00-check-raid:

nagios ALL=(root) NOPASSWD:/usr/sbin/megaclisas-status --nagios

You can then expect monitoring like this (Centreon on top of Centreon Engine in this picture):

3.5. About /dev/megaraid_sas_ioctl_node

All these tools require this device node to exist.

It has to be created by hand; only the proprietary tools create the device node themselves at startup.

I made some wrappers around the binaries from the megactl package that create the node if it doesn't exist yet.
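The idea can be sketched in Python (this is not my actual wrapper): look up the major number the driver registered in /proc/devices and create a character device with minor 0. The `megaraid_sas_ioctl` entry name, minor 0 and the 0600 permissions are assumptions on my part; check /proc/devices on your system. Creating the node requires root.

```python
import os
import stat
from typing import Optional

NODE = "/dev/megaraid_sas_ioctl_node"


def ioctl_major(proc_devices: str, name: str = "megaraid_sas_ioctl") -> Optional[int]:
    """Return the character-device major number registered under `name`."""
    for line in proc_devices.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1] == name and parts[0].isdigit():
            return int(parts[0])
    return None


def ensure_node(path: str = NODE) -> None:
    """Create the device node (minor 0) if missing. Needs root and the driver."""
    if os.path.exists(path):
        return
    with open("/proc/devices") as f:
        major = ioctl_major(f.read())
    if major is None:
        raise RuntimeError("megaraid_sas_ioctl not in /proc/devices (driver loaded?)")
    os.mknod(path, 0o600 | stat.S_IFCHR, os.makedev(major, 0))
```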

4. SMART

I finally found a way to read SMART data through these MegaRAID cards. The first thing you'll have to do is list the IDs of all your physical disks:

server:~# megacli -PDlist -a0 | grep '^Device Id:'
Device Id: 0
Device Id: 1
Device Id: 2
Device Id: 3

Then you can add lines like these to /etc/smartd.conf (don't forget to comment out the DEVICESCAN line):

# LSI MegaRAID
/dev/sda -d sat+megaraid,0 -a -s L/../../3/02
/dev/sda -d sat+megaraid,1 -a -s L/../../3/03
/dev/sda -d sat+megaraid,2 -a -s L/../../3/04
/dev/sda -d sat+megaraid,3 -a -s L/../../3/05
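With many disks, these lines can be generated from the `Device Id:` output. A Python sketch that reproduces the pattern above: one line per device ID, with the weekly long self-test shifted by one hour per disk so they don't all run at once. The function names are hypothetical; as far as I know, `sat+megaraid` suits SATA disks, while SAS disks would rather use `-d megaraid,N` (check the smartd.conf documentation).

```python
import re


def device_ids(pdlist_output: str) -> list[int]:
    """Extract the numbers from 'Device Id: N' lines."""
    return [int(n) for n in re.findall(r"Device Id:\s*(\d+)", pdlist_output)]


def smartd_lines(ids: list[int], block_device: str = "/dev/sda",
                 dev_type: str = "sat+megaraid", first_hour: int = 2,
                 weekday: int = 3) -> list[str]:
    """One smartd.conf line per disk, long test on `weekday`, staggered hourly."""
    lines = ["# LSI MegaRAID"]
    for offset, dev_id in enumerate(ids):
        hour = (first_hour + offset) % 24
        lines.append(f"{block_device} -d {dev_type},{dev_id} -a -s L/../../{weekday}/{hour:02d}")
    return lines
```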

Please note that you need a recent version of smartmontools: 5.38 from Debian Lenny won't work, while 5.39.1+svn3124 from Squeeze does.

5. BIOS upgrade from a Linux system

Dell cards can be flashed using firmware-tools.

See http://linux.dell.com/wiki/index.php/Repository/firmware for more information.

However, this only works on Red Hat, CentOS, SUSE and Fedora. Even though Ubuntu is listed on the wiki page, upgrading LSI cards is not supported there.

We use a PXE-booted Fedora 8 nfsroot to update the firmware of our Dell servers running Debian.

Michael reported that firmware can be flashed using megacli with the following syntax:

megacli -adpfwflash -f mr2208fw.rom -a0

I haven't done it myself, but I'm sure it works.

Attachments (2)

- megacli_user_guide.pdf (4.6 MB), added 13 years ago: Megacli User Guide PDF

- centreon_check_raid.png (7.8 KB), added 9 years ago